Ever thought about how Instagram handles millions of photo uploads every minute without breaking a sweat? Or how Netflix serves content to hundreds of millions of users simultaneously without your favourite show buffering endlessly? The secret sauce behind these high-traffic juggernauts isn’t just powerful servers—it’s database sharding for high traffic applications.

Here’s the reality: your monolithic database might be working fine now, but what happens when your user base explodes overnight? What if your single database becomes the bottleneck that crashes your entire application during peak hours? These aren’t just hypothetical scenarios—they’re nightmares that keep CTOs awake at night.

In this comprehensive guide, you’ll discover exactly how to implement database sharding for high traffic applications. Moreover, you’ll learn practical strategies that companies like Twitter and Uber use to scale their databases horizontally.

By the end of this article, you’ll understand different sharding strategies, know how to choose the right sharding key, and be equipped to avoid common pitfalls that could cripple your application’s performance. Because let’s face it—in today’s competitive tech landscape, slow applications don’t just lose users; they lose business.

Understanding the Database Scaling Problem

Before we dive into sharding, let’s address why you need it in the first place. Imagine you’re running an e-commerce platform. Initially, your single PostgreSQL database handles everything smoothly—user data, product catalogs, orders, everything. Life is good.

Then Black Friday hits. Traffic spikes 50x. Your database server maxes out its CPU and memory. Queries that took milliseconds now take seconds. Your application slows to a crawl, and users abandon their carts. You just lost millions in revenue because your database couldn’t scale.

This is where vertical scaling (adding more CPU, RAM, and storage to your existing server) hits a wall. There’s only so much hardware you can throw at a single machine. Also, vertical scaling is expensive and has physical limits. You need a different approach.

What Exactly is Database Sharding?

Database sharding is a horizontal scaling technique where you split your database into smaller, more manageable pieces called shards. Each shard is essentially an independent database that holds a subset of your total data. Think of it like distributing books across multiple library branches instead of cramming them all into one building.

Here’s a relatable example: suppose you’re building a social media app with 100 million users. Instead of storing all user data in one database, you could split it across 10 shards, with each shard handling 10 million users. When User A (on Shard 1) posts something, only Shard 1 gets hit. When User B (on Shard 5) scrolls their feed, only Shard 5 processes that request.

The beauty of sharding lies in parallel processing. Because each shard operates independently, they can handle requests simultaneously. This means your system’s capacity grows with each shard you add, as long as most requests can be answered by a single shard.

Key Sharding Strategies You Need to Know

Choosing the right sharding strategy is critical because it determines how your data gets distributed and how efficiently your application can retrieve it. Let’s explore the most common approaches.

1. Range-Based Sharding

Range-based sharding divides data based on ranges of a particular value. For instance, you might shard user data alphabetically—users with names starting A-F go to Shard 1, G-L to Shard 2, and so on.

Example in action: An e-commerce platform could shard orders by date ranges. Orders from January-March live on Shard 1, April-June on Shard 2, etc. This works brilliantly for time-series data, where recent data gets accessed more frequently.

However, there’s a catch. Range-based sharding can create hotspots, which are situations where one shard gets disproportionate traffic. If most of your users have names starting with ‘A’ or ‘S’, those shards will be overloaded while others sit idle. Range-based sharding is simple to implement, but you need to consider your data distribution carefully before committing to it.
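To make the alphabetical example concrete, here is a minimal sketch of a range lookup. The boundary letters and the function name `range_shard_for` are assumptions made for illustration, and shards are numbered from 0 rather than 1.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical boundaries: each entry is the first letter of the NEXT shard.
# A-F -> shard 0, G-L -> shard 1, M-R -> shard 2, S-Z -> shard 3.
RANGE_BOUNDARIES = ["G", "M", "S"]


def range_shard_for(name):
    """Return the shard index whose letter range contains the name's first letter."""
    first_letter = name[0].upper()
    return bisect_right(RANGE_BOUNDARIES, first_letter)


# A skewed sample shows how hotspots appear: most names land on shards 0 and 3.
sample_names = ["Alice", "Adam", "Anna", "Sam", "Sara", "George"]
rows_per_shard = Counter(range_shard_for(name) for name in sample_names)
print(rows_per_shard)
```

Counting rows per shard on a sample of your real data, as the last lines do, is a cheap way to spot a skewed range split before you deploy it.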

2. Hash-Based Sharding

Hash-based sharding uses a hash function to determine which shard stores each record. You take a sharding key (like user ID), pass it through a hash function, and the output determines the shard number.

Real-world implementation: Imagine you’re sharding a user database with 4 shards. When a new user signs up with ID 12345, you calculate: hash(12345) % 4 = 1. This user’s data goes to Shard 1. User ID 67890 might hash to Shard 3, and so on.

The major advantage here is uniform distribution. A good hash function spreads data evenly across shards, which avoids the data-skew hotspots that range-based sharding can create (although a single extremely popular key can still overload its shard). Moreover, hash-based sharding is predictable: you can always calculate which shard contains specific data without maintaining a lookup table.

The downside? Range queries become expensive because data that’s logically sequential (like user IDs 1000-2000) gets scattered across different shards.
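Both properties are easy to check for yourself. The sketch below assumes four shards and uses MD5 purely as a stable, well-mixed hash (not for security). The helper name `hash_shard_for` is made up for this example.

```python
import hashlib
from collections import Counter

SHARD_COUNT = 4  # assumed, matching the four-shard example above


def hash_shard_for(user_id, shard_count=SHARD_COUNT):
    """Map a user ID to a shard number using a stable hash."""
    digest = hashlib.md5(str(user_id).encode()).hexdigest()
    return int(digest, 16) % shard_count


# Distribution check: how many of 100,000 sequential IDs land on each shard?
distribution = Counter(hash_shard_for(uid) for uid in range(100_000))

# Range query check: which shards hold user IDs 1000-2000?
shards_for_range = sorted({hash_shard_for(uid) for uid in range(1000, 2001)})

print(distribution)
print(shards_for_range)
```

The per-shard counts should come out close to 25,000 each, while the range query ends up touching every shard. That is exactly the trade-off described above.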

3. Geographic Sharding

Geographic sharding distributes data based on location. Users in North America get stored on shards located in US data centers, European users on EU shards, and Asian users on Asian shards.

Why this matters: global services such as Netflix serve users from infrastructure located close to them, and geographic sharding applies the same idea to your database. When a user in Mumbai is served from an Asian shard, latency drops noticeably. This approach also helps with data residency requirements, for example keeping EU residents’ data on EU-based shards to simplify GDPR compliance.

This strategy shines for global applications because it minimizes network latency. However, it requires more complex infrastructure across multiple regions.
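In code, geographic routing usually boils down to two lookups: country to region, then region to shard. Every name in this sketch (the country list, region labels, and hostnames) is hypothetical.

```python
# Hypothetical mapping from ISO country codes to regions.
REGION_BY_COUNTRY = {
    "US": "na", "CA": "na",
    "DE": "eu", "FR": "eu",
    "IN": "asia", "JP": "asia",
}

# Hypothetical shard hostnames, one per region.
SHARD_HOST_BY_REGION = {
    "na": "users-db-na.example.com",
    "eu": "users-db-eu.example.com",
    "asia": "users-db-asia.example.com",
}

# Assumed fallback for unmapped countries. If residency rules apply to your
# data, rejecting the request may be safer than falling back silently.
DEFAULT_REGION = "na"


def geo_shard_host(country_code):
    """Return the shard hostname for a user's country."""
    region = REGION_BY_COUNTRY.get(country_code.upper(), DEFAULT_REGION)
    return SHARD_HOST_BY_REGION[region]


print(geo_shard_host("IN"))
```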

4. Directory-Based Sharding

Directory-based sharding maintains a lookup table (directory) that maps each piece of data to its shard. Think of it as a phonebook that tells you exactly where to find each record.

Practical example: In a multi-tenant SaaS application, you could maintain a directory mapping each company (tenant) to their shard. Company A’s data is on Shard 2, Company B is on Shard 5, etc. This gives you ultimate flexibility because you can rebalance data by simply updating the directory.

Although this approach offers maximum flexibility, the directory itself can become a bottleneck and a single point of failure. You’ll need to cache it aggressively and possibly replicate it across multiple nodes.
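Here is a minimal sketch of the tenant directory idea. A plain dictionary stands in for the directory table, and the class and tenant names are invented for illustration. A real directory would live in a replicated store with a cache in front of it.

```python
class ShardDirectory:
    """In-memory stand-in for a directory table mapping tenants to shards."""

    def __init__(self):
        self._tenant_to_shard = {}

    def assign(self, tenant_id, shard_id):
        """Create or update the mapping for a tenant."""
        self._tenant_to_shard[tenant_id] = shard_id

    def lookup(self, tenant_id):
        """Return the shard for a tenant, or raise if none is assigned."""
        try:
            return self._tenant_to_shard[tenant_id]
        except KeyError:
            raise LookupError(f"no shard assigned for tenant {tenant_id!r}") from None


directory = ShardDirectory()
directory.assign("company-a", 2)
directory.assign("company-b", 5)

# Rebalancing: once company-b's rows have been copied to shard 7,
# repointing the directory entry is all the routing layer needs.
directory.assign("company-b", 7)
print(directory.lookup("company-b"))
```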

Choosing the Perfect Sharding Key

Your sharding key is arguably the most critical decision in your sharding implementation because it determines how data gets distributed. A poor choice can doom your entire architecture.

What Makes a Good Sharding Key?

First, your sharding key should have high cardinality—meaning lots of unique values. User ID, email, or order ID are excellent choices because they’re unique. Sharding by gender (only 2-3 values) would be disastrous.

Second, consider query patterns. If 80% of your queries filter by user ID, then user ID is probably your best sharding key. When your sharding key matches your query patterns, you can route each request to a single shard instead of broadcasting it to all shards.

Third, ensure even distribution. Your sharding key should spread data uniformly across shards. Sharding an e-commerce platform by product category might seem logical, but if “Electronics” has 10x more products than “Books,” you’ll have severely imbalanced shards.
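You can measure this imbalance before committing to a key. The sketch below compares a low-cardinality key (category) with a high-cardinality one (product ID) on made-up data. `skew_ratio` is a hypothetical helper that divides the largest shard’s row count by the perfectly even share.

```python
import hashlib
from collections import Counter


def stable_shard(key, shard_count):
    """Hash any key to a shard number in a repeatable way."""
    digest = hashlib.md5(str(key).encode()).hexdigest()
    return int(digest, 16) % shard_count


def skew_ratio(keys, shard_count):
    """Largest shard's row count divided by the even share (1.0 is ideal)."""
    keys = list(keys)
    counts = Counter(stable_shard(key, shard_count) for key in keys)
    return max(counts.values()) / (len(keys) / shard_count)


# Made-up catalog: "Electronics" has 10x the products of "Books".
category_keys = ["Electronics"] * 10_000 + ["Books"] * 1_000
product_id_keys = range(11_000)

print(skew_ratio(category_keys, 4))    # two distinct values can fill at most two shards
print(skew_ratio(product_id_keys, 4))  # many distinct values spread far more evenly
```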

Real scenario: Uber likely shards their ride data by geographic region or city. Why? Because most queries are location-specific: finding nearby drivers, calculating ETAs, showing ride history for a location. The trade-off is that ride density varies by city, so the busiest cities may need dedicated shards or further splitting to keep load balanced.

Step-by-Step Implementation Guide

Let’s walk through implementing hash-based sharding for a user management system. I’ll use practical examples with PostgreSQL, although the concepts apply to any database.

Step 1: Design Your Shard Architecture

Start by determining how many shards you need. Consider your current data size, growth projections, and query load. A good rule of thumb is to plan for 2-3 years of growth.

Example calculation: If you have 10 million users now, expect 50 million in 3 years, and want each shard handling roughly 5 million users, you’d need 10 shards. Always round up and add buffer capacity because re-sharding later is painful.
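The same arithmetic as a small helper, in case you want to try different projections. The `buffer_percent` parameter is an assumption added here to model the extra headroom mentioned above.

```python
def shards_needed(projected_rows, rows_per_shard, buffer_percent=0):
    """Return the shard count, rounded up, with optional headroom."""
    padded_rows = projected_rows * (100 + buffer_percent)
    capacity = rows_per_shard * 100
    return -(-padded_rows // capacity)  # ceiling division using integers only


print(shards_needed(50_000_000, 5_000_000))      # 10, as in the example
print(shards_needed(50_000_000, 5_000_000, 20))  # 12 with 20% headroom
```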

Step 2: Set Up Your Database Instances

Create separate database instances for each shard. In production, these should be on different physical servers or cloud instances. Also, configure replication for each shard to ensure high availability.

Shard 0: users-db-shard-0.example.com

Shard 1: users-db-shard-1.example.com

Shard 2: users-db-shard-2.example.com

...

Each shard has an identical schema but contains a different subset of the data.

Step 3: Implement Routing Logic

Your application needs logic to route queries to the correct shard. Here’s a simplified example in Python:

```python
import hashlib


class ShardRouter:
    def __init__(self, shard_count):
        self.shard_count = shard_count

    def get_shard_id(self, user_id):
        # Hash the user_id and determine shard
        hash_value = int(hashlib.md5(str(user_id).encode()).hexdigest(), 16)
        return hash_value % self.shard_count
```

When a user logs in with ID 42, you calculate get_shard_id(42), which returns, say, Shard 3. Your application then routes all queries for that user to Shard 3’s database connection.
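To connect the shard number to an actual database, you also need a mapping from shard ID to connection. The sketch below reuses the three hostnames from Step 2. `ShardedConnections` is an invented class, and `connect` is a hypothetical factory that you would back with your PostgreSQL driver.

```python
import hashlib

# Hostnames follow the naming pattern from Step 2; three shards are assumed.
SHARD_HOSTS = [
    "users-db-shard-0.example.com",
    "users-db-shard-1.example.com",
    "users-db-shard-2.example.com",
]


def shard_id_for(user_id, shard_count):
    """Same hashing scheme as the router: MD5 of the ID, modulo shard count."""
    hash_value = int(hashlib.md5(str(user_id).encode()).hexdigest(), 16)
    return hash_value % shard_count


class ShardedConnections:
    """Lazily opens and reuses one connection per shard.

    `connect` is a hypothetical factory that takes a hostname and returns
    a connection object.
    """

    def __init__(self, hosts, connect):
        self._hosts = list(hosts)
        self._connect = connect
        self._connections = {}

    def for_user(self, user_id):
        shard_id = shard_id_for(user_id, len(self._hosts))
        if shard_id not in self._connections:
            self._connections[shard_id] = self._connect(self._hosts[shard_id])
        return self._connections[shard_id]


# For demonstration, the "connection" is just the hostname string.
connections = ShardedConnections(SHARD_HOSTS, connect=lambda host: host)
print(connections.for_user(42))
```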

Step 4: Handle Cross-Shard Queries

Sometimes you’ll need data from multiple shards. For instance, generating a global leaderboard or searching users across all shards. These cross-shard queries require special handling.

Strategy 1: Scatter-gather approach. Send the query to all shards, collect results, and merge them in your application layer. Although this works, it’s slow and should be avoided for frequent operations.
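A rough sketch of scatter-gather for the leaderboard case follows. The per-shard rows are invented, and `top_scores_on_shard` stands in for whatever query you would really send to each shard.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

# Invented per-shard data: each shard holds (user_id, score) rows.
SHARD_ROWS = {
    0: [(1, 120), (4, 310)],
    1: [(2, 95), (5, 480)],
    2: [(3, 275), (6, 60)],
}


def top_scores_on_shard(shard_id, limit):
    """Stand-in for a per-shard query like ORDER BY score DESC LIMIT n."""
    return heapq.nlargest(limit, SHARD_ROWS[shard_id], key=lambda row: row[1])


def global_top_scores(limit):
    # Scatter: run the same query against every shard in parallel.
    with ThreadPoolExecutor(max_workers=len(SHARD_ROWS)) as pool:
        partials = pool.map(lambda sid: top_scores_on_shard(sid, limit), SHARD_ROWS)
        # Gather: flatten the partial results into one list.
        merged = [row for partial in partials for row in partial]
    # Merge: keep only the overall top rows.
    return heapq.nlargest(limit, merged, key=lambda row: row[1])


print(global_top_scores(3))  # [(5, 480), (4, 310), (3, 275)]
```

Note that every shard does work on every call, and the slowest shard sets the response time. That is why this pattern is best kept for infrequent queries.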

Strategy 2: Maintain a separate global database for cross-shard queries. For our leaderboard example, you could maintain a denormalized leaderboard table that aggregates data from all shards periodically. Yes, this adds complexity, but it’s far more performant.

Step 5: Implement Shard Migration Tools

You’ll eventually need to rebalance shards or add new ones. Build tools early that can migrate data between shards with minimal downtime. Moreover, these tools should be well-tested because shard migrations are high-risk operations.
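A useful first tool is a dry-run planner that tells you which rows would have to move. The sketch below assumes the simple hash-modulo routing from Step 3 and uses hypothetical names. It also shows why adding a shard under that scheme is so disruptive.

```python
import hashlib


def shard_for(user_id, shard_count):
    """Hash-modulo routing, as in the Step 3 router."""
    digest = hashlib.md5(str(user_id).encode()).hexdigest()
    return int(digest, 16) % shard_count


def plan_moves(user_ids, old_count, new_count):
    """Return {user_id: (old_shard, new_shard)} for every row that must move."""
    moves = {}
    for user_id in user_ids:
        old_shard = shard_for(user_id, old_count)
        new_shard = shard_for(user_id, new_count)
        if old_shard != new_shard:
            moves[user_id] = (old_shard, new_shard)
    return moves


moves = plan_moves(range(10_000), old_count=4, new_count=5)
print(f"{len(moves) / 10_000:.0%} of rows would move")
```

Going from 4 to 5 shards relocates roughly four out of five rows, because a row only stays put when its hash gives the same remainder for both shard counts. This is one reason teams look at alternatives to plain modulo routing before their first re-shard.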

Critical Challenges and How to Overcome Them

Sharding solves one scaling problem but introduces several new ones. Here are the critical challenges you’re likely to face when implementing database sharding for high traffic applications, along with ways to overcome each of them.

Challenge 1: Distributed Transactions

Transactions spanning multiple shards are problematic. If a user transfers money to someone on a different shard, you need both updates to succeed or both to fail. The ACID guarantees a single database gives you don’t extend across shards on their own.

Solution: Implement the Two-Phase Commit protocol or, better yet, redesign your application to avoid cross-shard transactions. Many companies choose eventual consistency over distributed transactions because it’s simpler and more scalable.
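To show the shape of Two-Phase Commit, here is a toy in-memory version of the money transfer. It is a sketch only: the class and function names are invented, and it leaves out everything that makes the real protocol hard (durable logs, timeouts, coordinator crashes).

```python
class ShardAccount:
    """Toy participant: one account balance living on one shard."""

    def __init__(self, balance):
        self.balance = balance
        self._pending = None

    def prepare(self, delta):
        # Phase 1: vote yes only if the change can be applied safely.
        if self.balance + delta < 0:
            return False
        self._pending = delta
        return True

    def commit(self):
        # Phase 2 (success): make the prepared change permanent.
        self.balance += self._pending
        self._pending = None

    def rollback(self):
        # Phase 2 (failure): discard the prepared change.
        self._pending = None


def transfer(source, target, amount):
    """Coordinator: commit on both shards only if both vote yes."""
    participants = [(source, -amount), (target, amount)]
    votes = [account.prepare(delta) for account, delta in participants]
    if all(votes):
        for account, _ in participants:
            account.commit()
        return True
    for account, _ in participants:
        account.rollback()
    return False


alice, bob = ShardAccount(100), ShardAccount(20)
print(transfer(alice, bob, 70), alice.balance, bob.balance)  # True 30 90
print(transfer(alice, bob, 50), alice.balance, bob.balance)  # False 30 90
```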

Challenge 2: Hotspots and Uneven Load

Even with careful planning, some shards might get more traffic than others. Perhaps certain users or geographic regions are more active.

Solution: Monitor shard performance continuously. When you detect hotspots, you have several options: split hot shards into smaller ones, move high-traffic customers to dedicated shards, or implement read replicas for the overloaded shards. Also, consider adding a caching layer (like Redis) in front of hot shards.
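Detection can start very simply. This sketch flags any shard whose request count is well above the average. The 1.5x threshold and the sample numbers are assumptions you would tune against your own metrics.

```python
from statistics import mean


def find_hot_shards(requests_per_shard, threshold=1.5):
    """Return shard IDs whose load exceeds `threshold` times the mean load."""
    average = mean(requests_per_shard.values())
    return sorted(
        shard_id
        for shard_id, count in requests_per_shard.items()
        if count > threshold * average
    )


# Invented request counts per shard for one monitoring interval.
sample = {0: 1_200, 1: 1_100, 2: 4_900, 3: 1_000}
print(find_hot_shards(sample))  # [2]
```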

Challenge 3: Operational Complexity

Managing 10 databases is inherently more complex than managing one. Backups, monitoring, schema migrations—everything gets multiplied.

Solution: Invest heavily in automation and tooling. Infrastructure-as-Code tools like Terraform can provision shards consistently. Database migration tools like Flyway or Liquibase can apply the same versioned schema changes to every shard when you run them against each one from a script or pipeline. Moreover, centralized monitoring with tools like Datadog or Prometheus becomes essential.
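The core loop behind "apply this change to every shard" looks something like the sketch below. In-memory SQLite databases stand in for the shards purely so the example runs anywhere, and the table, column, and function names are made up.

```python
import sqlite3

# In-memory SQLite databases stand in for three shards.
shards = {shard_id: sqlite3.connect(":memory:") for shard_id in range(3)}
for conn in shards.values():
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")

MIGRATION = "ALTER TABLE users ADD COLUMN email TEXT"


def apply_to_all_shards(statement):
    """Apply one schema change to every shard and return the IDs that failed."""
    failed = []
    for shard_id, conn in shards.items():
        try:
            conn.execute(statement)
            conn.commit()
        except sqlite3.Error:
            failed.append(shard_id)
    return failed


failed_shards = apply_to_all_shards(MIGRATION)
print(failed_shards)  # [] when every shard accepted the change
```

Running the same statement a second time fails on every shard because the column already exists. Dedicated migration tools handle this case for you by tracking which versions each database has applied.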

Sharding Alternatives Worth Considering

Before committing to sharding, explore whether these alternatives might solve your scaling problems with less complexity.

- Read replicas: If your bottleneck is primarily read traffic, creating read replicas of your database might suffice. This is simpler than sharding and works brilliantly for read-heavy applications.

- Caching: Implementing aggressive caching with Redis or Memcached can reduce database load by 80-90% for many applications. Although caching doesn’t eliminate the need for eventual scaling, it might delay it significantly.

- Vertical scaling: Modern cloud providers offer massive database instances. An AWS RDS db.r6g.16xlarge instance has 512GB RAM and 64 vCPUs. If this handles your load, it’s far simpler than sharding.
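As an illustration of the caching option, here is a minimal cache-aside sketch. A dictionary stands in for Redis or Memcached, `load_from_db` is a hypothetical callable that performs the real query, and expiry and invalidation are deliberately left out.

```python
class CachedUserReader:
    """Cache-aside reads: check the cache first, fall back to the database."""

    def __init__(self, load_from_db):
        self._load_from_db = load_from_db  # hypothetical database lookup
        self._cache = {}                   # stand-in for Redis or Memcached
        self.db_reads = 0

    def get(self, user_id):
        if user_id not in self._cache:
            # Cache miss: hit the database once and remember the result.
            self.db_reads += 1
            self._cache[user_id] = self._load_from_db(user_id)
        return self._cache[user_id]


reader = CachedUserReader(load_from_db=lambda user_id: {"id": user_id})
for _ in range(10):
    reader.get(42)
print(reader.db_reads)  # 1: nine of the ten reads never touched the database
```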

That said, if you’re truly dealing with high-traffic applications handling millions of requests, sharding eventually becomes inevitable.

Real-World Success Stories

Instagram sharded their database early in their growth journey, which enabled them to scale from thousands to billions of users. They use hash-based sharding with user ID as the sharding key, allowing them to distribute data evenly and route queries efficiently.

Discord shards their message data using a combination of channel ID and time-based partitioning. This approach works perfectly for their use case because messages are queried by channel, and recent messages get accessed far more frequently than old ones.

These companies didn’t start with sharding, though. They began with monolithic databases and migrated to sharding when scaling demands necessitated it. This gradual approach allowed them to learn their traffic patterns and make informed sharding decisions.

Final Thoughts: Is Sharding Right for You?

Database sharding for high traffic applications is powerful but complex. Implement it when you’ve exhausted simpler scaling options and your traffic genuinely demands it. Premature sharding adds unnecessary complexity that could slow your development velocity.

Start by thoroughly understanding your query patterns, data distribution, and growth projections. Choose a sharding strategy that aligns with how your application actually works, not just what sounds theoretically elegant. Moreover, invest in robust monitoring and automation from day one—you’ll thank yourself later.

Remember, companies like Facebook and Twitter didn’t build perfectly sharded systems on day one. They evolved their architectures as they grew. Your sharding implementation should be similarly pragmatic—start simple, measure carefully, and scale intelligently as your traffic demands it.

Struggling to Grasp System Design Concepts?

You’re not alone. Many CS graduates find System Design intimidating – from understanding load balancing to designing scalable architectures. The theory seems complex, and real-world applications feel out of reach.

But here’s the good news: Techarticle breaks down complex System Design topics into digestible, practical guides that actually make sense.

Continue Your System Design Journey:

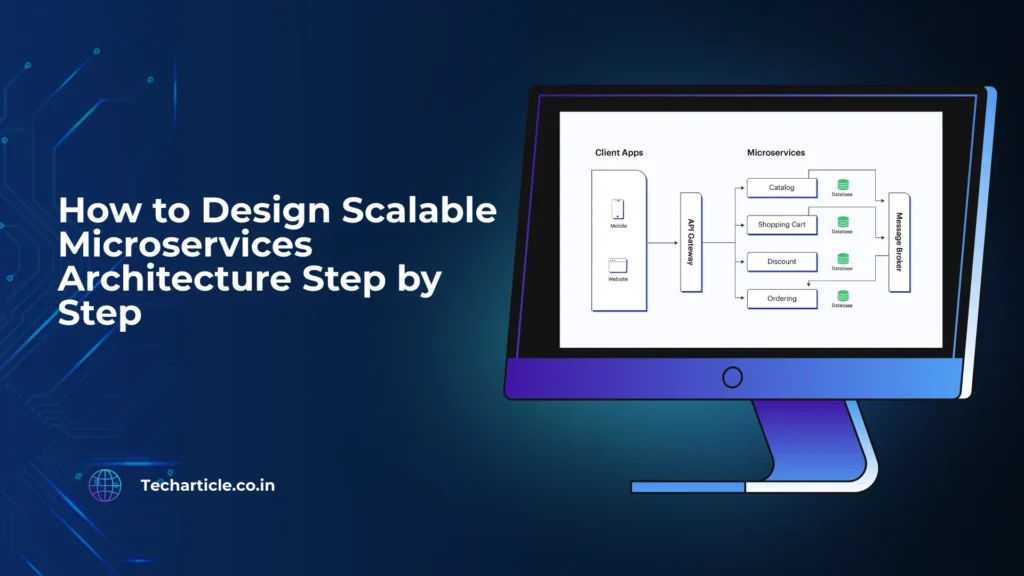

How to Design Scalable Microservices Architecture Step by Step