Understanding Algorithms: A Beginner’s Guide to the Core of Programming

Algorithms are an essential topic for anyone interested in computer science and programming. They are the building blocks of programs: step-by-step procedures used to solve problems and perform specific tasks. Understanding how algorithms work, and how to read and break them down, is critical for anyone who wants to become a programmer or software developer.

The fundamentals of algorithms involve three primary building blocks: sequencing, selection, and iteration. Sequencing refers to executing operations in order, one after another; selection is the decision to execute one operation rather than another; and iteration means repeating the same operations a fixed number of times or until a specific condition is met. These building blocks form the foundation of all algorithms, and understanding them is critical for decoding more complex ones.
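To make the three building blocks concrete, here is a minimal Python sketch. The function name `sum_of_evens` and the sample list are made up for illustration; the point is only to show where sequencing, selection, and iteration each appear.

```python
def sum_of_evens(numbers):
    """Add up the even values in a list, using all three building blocks."""
    total = 0              # sequencing: statements run in order, top to bottom
    for n in numbers:      # iteration: the body repeats once per element
        if n % 2 == 0:     # selection: decide whether this value is added
            total += n
    return total


print(sum_of_evens([1, 2, 3, 4, 5, 6]))  # prints 12
```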

Data structures play a crucial role in decoding algorithms. They organize and store data in a way that makes it easier to access and manipulate. Different data structures serve different purposes, and knowing which one to use in a given situation is a crucial part of decoding algorithms. Understanding these fundamentals and the role of data structures is a solid first step toward becoming a proficient programmer.
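As a small illustration of why the choice of data structure matters, the sketch below contrasts a stack (last in, first out) with a queue (first in, first out). The page and file names are invented examples; `collections.deque` is part of Python’s standard library.

```python
from collections import deque

# A stack suits "undo" or "back" behaviour: the most recent item comes out first.
stack = []
for page in ["home", "search", "results"]:
    stack.append(page)
last_page = stack.pop()

# A queue suits waiting lines: the oldest item comes out first.
queue = deque()
for job in ["report.pdf", "photo.png", "notes.txt"]:
    queue.append(job)
first_job = queue.popleft()

print(last_page)  # prints results
print(first_job)  # prints report.pdf
```

The same three items come back in a different order depending on the structure, which is exactly the kind of behaviour an algorithm relies on.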

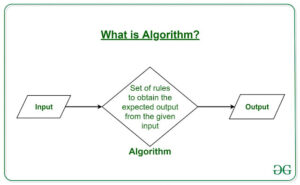

Introduction to Algorithms

An algorithm is a finite, well-defined sequence of instructions that a computer program follows to solve a problem or perform a task, turning some input into the desired output. Decoding, that is, converting encoded data back into a readable format, is just one example of such a task. Algorithms are used in fields as varied as computer science, telecommunications, medicine, and finance.

Algorithms play an essential role in data transmission and storage. For example, when a user sends an email, the message is typically encoded, and often encrypted, before transmission. The recipient’s email client then uses a decoding algorithm to convert the message back into a readable format.

Different Types of Algorithms

In the digital age, algorithms are the invisible engines powering our everyday technology. From predicting what movie you might like next to routing your GPS, algorithms are integral to our lives. Understanding the different types of algorithms not only demystifies how technology works but also empowers us to harness their potential in various fields.

Classification by Purpose

• Sorting Algorithms: These arrange data in a particular order. Examples include bubble sort, quick sort, and merge sort.

• Searching Algorithms: These are used to find specific data within a structure. Examples include linear search and binary search.

• Compression Algorithms: These reduce the size of data for storage efficiency. Examples include Huffman coding and LZW compression.

• Encryption Algorithms: These secure data by transforming it into an unreadable format. Examples include RSA and AES algorithms.

Classification by Approach

• Divide and Conquer: This approach breaks a problem into smaller sub-problems, solves each sub-problem, and then combines the solutions. Quick sort and merge sort are prime examples.

• Dynamic Programming: This method solves complex problems by breaking them down into simpler sub-problems and solving each sub-problem just once. Examples include the Fibonacci sequence and the Knapsack problem.

• Greedy Algorithms: These make the locally optimal choice at each stage with the hope of finding the global optimum. Examples include Huffman coding and the activity selection problem.

• Backtracking: This technique involves trying out different solutions and backtracking upon failure. Examples include the N-Queens problem and Sudoku solvers.
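The difference between the greedy and dynamic programming approaches is easiest to see on a problem where they disagree. The sketch below uses coin change, which is not one of the examples named above, with a deliberately awkward, made-up coin set (1, 3, 4). Both function names are hypothetical.

```python
def greedy_coins(coins, amount):
    """Always take the largest coin that fits (locally optimal choice)."""
    count = 0
    for coin in sorted(coins, reverse=True):
        used, amount = divmod(amount, coin)
        count += used
    return count if amount == 0 else None


def dp_coins(coins, amount):
    """Solve every smaller amount once and reuse the stored answers."""
    INF = float("inf")
    best = [0] + [INF] * amount  # best[v] = fewest coins that sum to v
    for value in range(1, amount + 1):
        for coin in coins:
            if coin <= value and best[value - coin] + 1 < best[value]:
                best[value] = best[value - coin] + 1
    return best[amount] if best[amount] != INF else None


print(greedy_coins([1, 3, 4], 6))  # prints 3 (4 + 1 + 1)
print(dp_coins([1, 3, 4], 6))      # prints 2 (3 + 3)
```

The greedy version is shorter and faster, but here it misses the best answer, while the dynamic programming version finds it at the cost of filling in a table.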

Understanding these algorithms opens up a world of possibilities. Whether you’re a budding coder or a tech enthusiast, knowing how these algorithms work can help you develop better software, solve complex problems, and innovate in your field. Dive deeper into the world of algorithms to unlock their full potential and revolutionize the way you approach problem-solving.

Components of an Algorithm

Every algorithm has basic components:

1. Input: The data given to the algorithm.

2. Output: The result produced by the algorithm.

3. Steps/Processes: The detailed steps to convert input into output.

4. Efficiency and Performance: How well the algorithm performs, typically measured in time complexity (speed) and space complexity (memory usage).

Common Algorithm Techniques

Algorithms are the backbone of problem-solving in technology, weaving complexity into simplicity across various domains. Understanding common algorithm techniques empowers developers and researchers to devise solutions that are not only effective but also efficient. Here’s an insight into some of the most prevalent methods:

a. Brute Force: Starting with the simplest, the brute force method checks every possible scenario to find a solution. It’s straightforward but can be slow, making it a basic yet crucial technique.

b. Divide and Conquer: This method breaks a large problem into smaller, manageable sub-problems, solves each one individually, and then combines them for the final solution. It’s particularly effective for sorting and searching tasks.

c. Greedy Algorithms: Greedy algorithms make the best possible choice at each step with the hope of finding the global optimum. It’s quick and easy to implement but doesn’t always guarantee the best overall solution.

d. Dynamic Programming: By breaking problems down into overlapping sub-problems, solving them once, and storing their solutions, dynamic programming avoids redundant work and speeds up the process, particularly useful for optimization problems.

e. Backtracking: This technique systematically tries to build a solution incrementally, abandoning a path as soon as it determines that this path cannot possibly lead to a viable solution.

f. Randomized Algorithms: These introduce a chance element into the decision process, which can lead to faster and more efficient solutions, especially for complex problems where deterministic solutions are cumbersome.

g. Parallel Algorithms: By dividing the task into parts that can be executed simultaneously, parallel algorithms leverage modern multi-core processors to enhance performance and efficiency.

h. Branch and Bound: This technique uses branching to explore the solution spaces and bounding to limit the search space based on the best solution found so far. It’s effective for optimization problems where finding the best solution is crucial.
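As one worked instance of these techniques, here is a minimal backtracking sketch for the N-Queens puzzle (placing n queens on an n×n board so that none attack each other). The function name `count_n_queens` is an illustrative choice, and this is only one of several reasonable ways to write it.

```python
def count_n_queens(n):
    """Count the ways to place n non-attacking queens, one row at a time."""
    cols, diag1, diag2 = set(), set(), set()

    def place(row):
        if row == n:                 # every row has a queen: one full solution
            return 1
        count = 0
        for col in range(n):
            # Abandon this square as soon as it conflicts with an earlier queen.
            if col in cols or (row - col) in diag1 or (row + col) in diag2:
                continue
            cols.add(col)
            diag1.add(row - col)
            diag2.add(row + col)
            count += place(row + 1)  # try to extend the partial solution
            cols.remove(col)         # backtrack: undo the choice
            diag1.remove(row - col)
            diag2.remove(row + col)
        return count

    return place(0)


print(count_n_queens(4))  # prints 2
print(count_n_queens(8))  # prints 92
```

A brute-force version would test every possible arrangement of queens; backtracking reaches the same count while skipping whole groups of arrangements that already contain a conflict.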

These techniques form the crux of algorithm design, each suited to different types of problems and essential for the toolkit of anyone involved in computer science and problem-solving. Understanding and applying these methods can significantly enhance the ability to tackle complex challenges efficiently.

Algorithm Design and Analysis

Designing and analyzing algorithms is a crucial part of computer science. Design involves creating the algorithm, while analysis involves evaluating its efficiency. Time complexity is usually expressed in Big O notation, which gives an upper bound on how the running time grows as the input size increases, and space complexity describes how much memory the algorithm needs.

Sorting Algorithms

Sorting algorithms are fundamental to computer science. Here are a few common ones:

• Bubble Sort: A simple comparison-based algorithm where adjacent elements are swapped if they are in the wrong order. It’s easy to implement but inefficient for large lists.

• Quick Sort: A divide and conquer algorithm that selects a ‘pivot’ and partitions the array around the pivot. It’s much faster than bubble sort for large datasets.

• Merge Sort: Another divide and conquer algorithm that divides the list into halves, sorts them, and then merges them back together.

• Insertion Sort: Builds the final sorted array one item at a time, with the insertion of each item into its correct position.

• Heap Sort: Converts the array into a heap data structure and then repeatedly extracts the maximum element.
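The divide-and-merge idea behind merge sort can be sketched in a few lines of Python. This version returns a new list rather than sorting in place, which is a simplification chosen for readability.

```python
def merge_sort(items):
    """Return a new sorted list using merge sort."""
    if len(items) <= 1:               # a list of 0 or 1 items is already sorted
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])    # divide: sort each half separately
    right = merge_sort(items[mid:])

    merged = []                       # combine: merge the two sorted halves
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])           # one of these two slices is empty
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 9, 1, 5, 6]))  # prints [1, 2, 5, 5, 6, 9]
```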

Searching Algorithms

Searching algorithms find the position of a target value within a list.

• Linear Search: Checks each element until the target is found or the list ends.

• Binary Search: Efficiently searches a sorted list by repeatedly dividing the search interval in half.

• Depth-First Search (DFS): Explores a graph by going as deep as possible down one branch before backtracking.

• Breadth-First Search (BFS): Explores all neighbors at the present depth before moving on to nodes at the next depth level.
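The difference between DFS and BFS shows up in the order nodes are visited. The sketch below runs both on a tiny made-up graph stored as a dictionary of neighbor lists; the graph and the function names are assumptions for illustration.

```python
from collections import deque


def bfs(graph, start):
    """Visit nodes level by level, using a queue."""
    order, seen = [start], {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


def dfs(graph, start, order=None):
    """Follow one branch as deep as possible before backtracking."""
    if order is None:
        order = []
    order.append(start)
    for neighbor in graph[start]:
        if neighbor not in order:
            dfs(graph, neighbor, order)
    return order


graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
print(bfs(graph, "A"))  # prints ['A', 'B', 'C', 'D']
print(dfs(graph, "A"))  # prints ['A', 'B', 'D', 'C']
```

BFS reaches both of A’s neighbors before D, while DFS follows A → B → D to the end before returning to C.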

Graph Algorithms

Graphs represent networks of nodes connected by edges. Common graph algorithms include:

• Dijkstra’s Algorithm: Finds the shortest path from a source to all other nodes in a graph with non-negative weights.

• Bellman-Ford Algorithm: Also finds the shortest paths from a source, but can handle graphs with negative edge weights and can detect negative cycles.

• Floyd-Warshall Algorithm: Computes shortest paths between all pairs of nodes.

• Kruskal’s Algorithm: Finds the minimum spanning tree for a graph by adding edges in order of increasing weight, skipping any edge that would create a cycle.

• Prim’s Algorithm: Also finds the minimum spanning tree, but grows a single tree outward from a starting node, always adding the cheapest edge that connects a new node.
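As an example of a graph algorithm in code, here is a compact sketch of Dijkstra’s algorithm using Python’s standard `heapq` module as the priority queue. The graph, its weights, and the function name are invented for illustration, and the graph is assumed to have only non-negative weights.

```python
import heapq


def dijkstra(graph, source):
    """Return the shortest distance from source to every reachable node."""
    dist = {source: 0}
    heap = [(0, source)]                      # (distance so far, node)
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue                          # stale entry: a shorter path was found
        for neighbor, weight in graph[node].items():
            new_d = d + weight
            if new_d < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_d
                heapq.heappush(heap, (new_d, neighbor))
    return dist


graph = {
    "A": {"B": 1, "C": 4},
    "B": {"C": 2, "D": 5},
    "C": {"D": 1},
    "D": {},
}
print(dijkstra(graph, "A"))  # prints {'A': 0, 'B': 1, 'C': 3, 'D': 4}
```

Note that the direct edge A → C costs 4, but the algorithm finds the cheaper route A → B → C with cost 3.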

String Algorithms

String algorithms process sequences of characters.

• Knuth-Morris-Pratt (KMP) Algorithm: Efficiently finds occurrences of a pattern within a text.

• Boyer-Moore Algorithm: Skips sections of the text to find the pattern faster.

• Rabin-Karp Algorithm: Uses hashing to find patterns in text.
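To show what “efficiently” means for KMP, the sketch below precomputes a failure table for the pattern so that the text is scanned once, without ever moving backwards. The function names are illustrative, and this is a teaching version rather than a tuned implementation.

```python
def build_failure_table(pattern):
    """table[i] = length of the longest proper prefix of pattern[:i+1]
    that is also a suffix of it."""
    table = [0] * len(pattern)
    k = 0
    for i in range(1, len(pattern)):
        while k > 0 and pattern[i] != pattern[k]:
            k = table[k - 1]
        if pattern[i] == pattern[k]:
            k += 1
        table[i] = k
    return table


def kmp_search(text, pattern):
    """Return the start index of every occurrence of pattern in text."""
    if not pattern:
        return []
    table = build_failure_table(pattern)
    matches = []
    k = 0                                  # characters of pattern matched so far
    for i, ch in enumerate(text):
        while k > 0 and ch != pattern[k]:
            k = table[k - 1]               # fall back without re-reading text
        if ch == pattern[k]:
            k += 1
        if k == len(pattern):
            matches.append(i - k + 1)
            k = table[k - 1]               # keep going to allow overlaps
    return matches


print(kmp_search("abababa", "aba"))  # prints [0, 2, 4]
```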

Dynamic Programming Algorithms

Dynamic programming solves problems by breaking them down into simpler sub-problems and storing the results to avoid redundant work.

• Fibonacci Sequence: A classic example where each number is the sum of the two preceding ones.

• Knapsack Problem: Determines the most valuable combination of items that can be carried in a knapsack without exceeding its weight capacity.

• Longest Common Subsequence: Finds the longest subsequence common to two sequences.
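Two of these examples fit in a short sketch. The Fibonacci function below stores results with the standard `functools.lru_cache` decorator (top-down memoization), and the longest common subsequence function fills a table bottom-up. The function names and the convention that `fib(0)` is 0 are choices made for this illustration.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n):
    """n-th Fibonacci number, with fib(0) = 0 and fib(1) = 1."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


def lcs_length(a, b):
    """Length of the longest common subsequence of sequences a and b."""
    # table[i][j] holds the answer for the first i items of a and first j of b.
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[-1][-1]


print(fib(30))                          # prints 832040
print(lcs_length("ABCBDAB", "BDCABA"))  # prints 4
```

Without the cache, the recursive `fib` would recompute the same values an exponential number of times; with it, each value is computed once.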

Greedy Algorithms

Greedy algorithms build up a solution piece by piece, always choosing the next piece that offers the most immediate benefit.

• Activity Selection: Selects the maximum number of activities that don’t overlap.

• Huffman Coding: Builds an optimal prefix code for a set of symbols, giving shorter codes to more frequent symbols.
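Activity selection is a case where the greedy choice does lead to an optimal answer: always pick the compatible activity that finishes earliest. The sketch below assumes each activity is a `(start, end)` pair and that an activity may begin exactly when the previous one ends; the sample schedule is made up.

```python
def select_activities(activities):
    """Pick a largest set of non-overlapping (start, end) activities."""
    chosen = []
    last_end = float("-inf")
    # Greedy rule: consider activities in order of finishing time.
    for start, end in sorted(activities, key=lambda a: a[1]):
        if start >= last_end:       # compatible with everything chosen so far
            chosen.append((start, end))
            last_end = end
    return chosen


schedule = [(1, 4), (3, 5), (0, 6), (5, 7), (3, 9), (5, 9),
            (6, 10), (8, 11), (8, 12), (2, 14), (12, 16)]
print(select_activities(schedule))
# prints [(1, 4), (5, 7), (8, 11), (12, 16)]
```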

Algorithm Complexity and Performance

Time Complexity

Time complexity measures the time taken by an algorithm to run as the input size grows, influencing the efficiency and performance of the algorithm. The time complexity of an algorithm is usually expressed using the Big O notation, which provides an upper bound on the growth rate of the algorithm’s running time as the input size increases.

The following table shows the common time complexities of different types of algorithms:

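| Algorithm | Time Complexity |
| --- | --- |
| Linear Search | O(n) |
| Binary Search | O(log n) |
| Bubble Sort | O(n^2) |
| Merge Sort | O(n log n) |
| Quick Sort | O(n log n) on average, O(n^2) in the worst case |
| Dynamic Programming | Depends on the problem (for example, O(n^2) for the longest common subsequence of two length-n inputs) |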
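The gap between O(n) and O(log n) is easier to appreciate by counting steps than by reading the notation. The sketch below counts how many elements each search inspects on a sorted list of one million numbers; the helper names are hypothetical and the counts are loop iterations, not timings.

```python
def linear_search_steps(items, target):
    """Count the elements inspected by a linear search."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(items, target):
    """Count the elements inspected by a binary search on a sorted list."""
    steps = 0
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if items[mid] == target:
            break
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


numbers = list(range(1_000_000))
print(linear_search_steps(numbers, 999_999))  # prints 1000000
print(binary_search_steps(numbers, 999_999))  # at most 20 for a million items
```

Doubling the list size doubles the work for linear search but adds only one more step for binary search.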

Space Complexity

Space complexity quantifies the total memory needed by an algorithm in relation to the input size, including fixed space for variables and instructions. The space complexity of an algorithm is usually expressed using the Big O notation, which provides an upper bound on the growth rate of the algorithm’s memory usage as the input size increases.

The following table shows the common space complexities of different types of algorithms:

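| Algorithm | Space Complexity |
| --- | --- |
| Linear Search | O(1) |
| Binary Search | O(1) (iterative version) |
| Bubble Sort | O(1) |
| Merge Sort | O(n) |
| Quick Sort | O(log n) on average for the recursion stack, O(n) in the worst case |
| Dynamic Programming | Depends on the problem (for example, O(n) for memoized Fibonacci) |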

Understanding the time and space complexity of an algorithm is crucial in determining its efficiency and performance. It allows developers to measure the efficiency of their algorithm to optimize resource and time usage.

Use of Algorithms in Different Programming Paradigms

Algorithms play a pivotal role in programming, adapting their form and function across various programming paradigms to solve diverse problems efficiently. Each paradigm utilizes algorithms differently, shaping the way data is manipulated and tasks are executed.

1. Imperative Programming: This paradigm involves explicitly detailing the steps a program must take to reach a desired state. Algorithms in this context are step-by-step instructions that manipulate program state through variables and control structures like loops and conditionals.

2. Procedural Programming: An extension of imperative programming, this paradigm breaks down tasks into procedure calls or subroutines, making algorithms modular and reusable. This approach helps in tracking program flow in a structured, top-down methodology.

3. Object-Oriented Programming (OOP): Algorithms in OOP are encapsulated within objects, which combine data and methods. This paradigm focuses on designing algorithms around entities known as objects, which interact with one another, emphasizing modularity and reusability.

4. Functional Programming: Here, algorithms are constructed using pure functions without side effects. This paradigm treats functions as first-class citizens and uses higher-order functions, promoting a declarative style where the focus is on what to solve rather than how to solve it.

5. Logic Programming: Algorithms are expressed as logical relations, facts, and rules, and the program runs by finding values that satisfy them. The approach is declarative: the programmer defines relationships and constraints and leaves it to the language’s execution engine to work out the exact steps.

6. Declarative Programming: In contrast to imperative programming, declarative paradigms express the logic of a computation without describing its control flow. Functional and logic programming are both usually counted as declarative styles; SQL queries are another common example.
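The same small algorithm can look quite different depending on the paradigm. The sketch below computes a sum of squares twice in Python, once in an imperative style and once in a functional style; both function names are made up for the comparison.

```python
def sum_of_squares_imperative(numbers):
    """Imperative style: spell out each step and update state as you go."""
    total = 0
    for n in numbers:
        total += n * n
    return total


def sum_of_squares_functional(numbers):
    """Functional style: describe the result as a composition of functions."""
    return sum(map(lambda n: n * n, numbers))


print(sum_of_squares_imperative([1, 2, 3, 4]))  # prints 30
print(sum_of_squares_functional([1, 2, 3, 4]))  # prints 30
```

The first version says how to build the answer step by step; the second says what the answer is and leaves the looping to `map` and `sum`.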

Each programming paradigm offers unique advantages in handling algorithms, depending on the nature of the problem and the required solution. Understanding these differences can significantly enhance a programmer’s ability to select the most appropriate paradigm for a particular task, ensuring more efficient and effective problem-solving strategies.

Applications of Algorithms in the Real World

In today’s digital world, algorithms are the silent heroes transforming our everyday lives. From the moment we wake up to the time we go to bed, algorithms play a crucial role in making our lives more convenient, efficient, and connected. Understanding the applications of algorithms in the real world reveals the vast impact they have across various industries and daily activities.

Key Applications of Algorithms include:

1. Healthcare: Algorithms are revolutionizing healthcare by enabling precise diagnostics, personalized treatments, and efficient patient management. Machine learning algorithms analyze medical images to detect diseases early, while predictive algorithms help in forecasting outbreaks and managing healthcare resources effectively.

2. Finance: In the financial sector, algorithms are essential for trading, fraud detection, and risk management. High-frequency trading algorithms execute trades in fractions of a second, aiming to profit from small price movements. Fraud detection algorithms analyze transaction patterns to identify and prevent fraudulent activities in real time.

3. Transportation: Algorithms optimize routes for navigation systems, making travel more efficient. They are used in ride-sharing apps to match drivers with passengers and in logistics to streamline delivery routes. Autonomous vehicles rely on complex algorithms to navigate and make real-time decisions.

4. Entertainment: Streaming services like Netflix and Spotify use recommendation algorithms to suggest content tailored to individual preferences. These algorithms analyze viewing and listening habits to provide a personalized entertainment experience, keeping users engaged.

5. Retail: E-commerce platforms use algorithms for inventory management, personalized marketing, and pricing strategies. Recommendation algorithms suggest products based on user behavior, increasing sales and customer satisfaction.

6. Social Media: Algorithms curate news feeds, suggest friends, and recommend content on platforms like Facebook, Twitter, and Instagram. They analyze user interactions to deliver personalized content, enhancing user engagement and satisfaction.

By exploring these real-world applications, we can appreciate how algorithms drive innovation and efficiency. They are the backbone of modern technology, influencing decisions and enhancing experiences in every aspect of our lives. Dive into the world of algorithms and discover how they shape the present and future of our society.

Key Takeaways

• Decoding algorithms involves understanding the building blocks of sequencing, selection, and iteration.

• Data structures play a critical role in organizing and manipulating data to decode algorithms.

• Proficiency in decoding algorithms is essential for anyone interested in becoming a programmer or software developer.

Frequently Asked Questions

Q1. What are the fundamental constructs of algorithms in programming?

The fundamental constructs of algorithms in programming are sequencing, selection, and iteration. Sequencing refers to the order in which instructions are executed. Selection is the decision-making process that allows the program to choose between one action or another based on certain conditions. Iteration refers to the process of repeating a set of instructions a certain number of times or until a particular condition is met.

Q2. Can you provide an example of a sequence algorithm?

An example of a sequence algorithm is a program that calculates the area of a rectangle. The program would first prompt the user to enter the length and width of the rectangle, then it would multiply these two values to find the area.
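In Python, that sequence might look like the sketch below. To keep it runnable without user prompts, the length and width are passed in as arguments instead of being typed in; the function name is an illustrative choice.

```python
def rectangle_area(length, width):
    """Each statement runs once, in order: no decisions and no loops."""
    area = length * width
    return area


print(rectangle_area(5, 3))  # prints 15
```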

Q3. How do sequencing, selection, and iteration function in computer science?

Sequencing, selection, and iteration are the building blocks of algorithms in computer science. Sequencing refers to the order in which instructions are executed. Selection allows the program to make decisions based on certain conditions. Iteration allows the program to repeat a set of instructions a certain number of times or until a particular condition is met.

Q4. In what ways are selection structures utilized within programming?

Selection structures are utilized within programming to make decisions based on certain conditions. For example, an if statement is a selection structure that allows the program to execute one set of instructions if a certain condition is true, and another set of instructions if the condition is false.

Q5. What constitutes the basic elements of a computer program?

The basic elements of a computer program include input, processing, and output. Input refers to the data that the program receives, processing refers to the operations that the program performs on the input data, and output refers to the results that the program produces.

Q6. What steps are involved in decoding a complex algorithm?

Decoding a complex algorithm involves breaking it down into smaller, more manageable pieces. This can be done by identifying the fundamental constructs of the algorithm, such as sequencing, selection, and iteration. Once these constructs have been identified, the programmer can begin to analyze each component and determine how it contributes to the overall algorithm.

Q7. What type of algorithm works by looping through a block of code until a condition is false?

An algorithm that works by repeating a set of instructions until a condition is no longer true is called an iterative algorithm, and the construct that does the repeating is a loop, most often a while loop. It keeps executing the same block of code as long as the condition holds and stops as soon as the condition becomes false. This type of algorithm is commonly used in programming to automate repetitive tasks or to step through data until a specific condition is met.
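A minimal sketch of such a loop in Python is shown below. The function name `halvings_until_one` is made up; it counts how many times a whole number can be halved (rounding down) before it reaches 1.

```python
def halvings_until_one(n):
    """Repeat the loop body while the condition n > 1 is still true."""
    steps = 0
    while n > 1:     # checked before every pass; the loop ends when it is false
        n //= 2
        steps += 1
    return steps


print(halvings_until_one(16))  # prints 4
print(halvings_until_one(10))  # prints 3
```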