First-In, First-Out: Understanding the Queue (FIFO) Data Structure

Introduction

Have you ever waited in line at a busy restaurant or a theme park? If so, you have already experienced a first-in, first-out (FIFO) queue without realizing it. The same idea applies well beyond everyday life and plays a central role in computer science and programming. In this article, we’ll look at First-In, First-Out (FIFO) data structures, focusing specifically on queues.

What is a Queue?

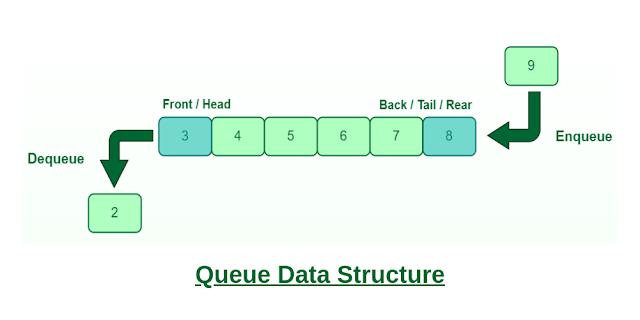

A queue is a linear data structure that follows the First-In, First-Out (FIFO) principle. It can be visualized as a line of people waiting for a service, where the person who arrives first gets served first. Similarly, in a queue data structure, the element that is inserted first is the first one to be removed.

Queues are widely used in computer science and can be implemented using arrays or linked lists. They are often employed in scenarios where data needs to be processed in the same order it was received. From operating systems to network communication protocols, queues find applications in various domains.

Image Source: GeeksforGeeks

Why Use a Queue?

You might wonder, why not just use a regular list to store and retrieve elements? Well, queues offer several advantages that make them suitable for specific situations:

Order Preservation: Queues maintain the order of elements, ensuring that the first element inserted is the first one to be removed. This characteristic is crucial when sequentially processing data.

Efficient Insertion and Removal: Queue operations, such as enqueue (insertion) and dequeue (removal), have a time complexity of O(1) in most implementations. This efficiency makes queues ideal for scenarios where fast insertion and removal are required.

Synchronization: Queues also play a vital role in multi-threaded programming and parallel processing. A thread-safe queue lets producer threads hand work to consumer threads in a well-defined order, so the threads coordinate through the queue instead of sharing data directly.

Now that we understand the basics of queues, let’s explore how they work and how they can be implemented.

How Does a Queue Work?

A queue operates on the principle of First-In, First-Out (FIFO), which means the element that enters the queue first will be the first one to leave. The operations commonly associated with queues are:

- Enqueue: This operation adds an element to the end of the queue, also known as the rear (or tail). Think of it as a person joining the back of a line.

- Dequeue: This operation removes the element at the front of the queue, also known as the head. It corresponds to the person at the front of the line being served and leaving.

- Peek: This operation returns the element at the front of the queue without removing it. It is useful when we want to check the next item to be processed.
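The three operations above can be tried out with Java's standard library before building a queue by hand. The following is a minimal sketch, assuming `java.util.ArrayDeque` is used through the `Queue` interface, where `offer` plays the role of enqueue, `poll` of dequeue, and `peek` of peek. The class name `QueueOperationsDemo` and the helper `serveInOrder` are hypothetical names chosen for illustration.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Queue;

class QueueOperationsDemo {
    // Enqueues every arrival, then dequeues until the queue is empty.
    // The returned list records the order in which elements were served.
    static List<String> serveInOrder(List<String> arrivals) {
        Queue<String> line = new ArrayDeque<>();
        for (String person : arrivals) {
            line.offer(person);          // enqueue at the rear
        }
        List<String> served = new ArrayList<>();
        while (!line.isEmpty()) {
            served.add(line.poll());     // dequeue from the front
        }
        return served;
    }

    public static void main(String[] args) {
        Queue<String> line = new ArrayDeque<>();
        line.offer("Alice");
        line.offer("Bob");
        // peek returns the front element but leaves it in the queue
        System.out.println("Next: " + line.peek() + ", size: " + line.size());
        System.out.println(serveInOrder(Arrays.asList("Alice", "Bob", "Carol")));
    }
}
```

The served order matches the arrival order, which is the FIFO property in action.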

To implement a queue, we can use either an array or a linked list. Let’s explore both approaches and see their advantages and disadvantages.

Implementing a Queue Using an Array in Java

One way to implement a queue is by using an array. In this approach, we maintain two pointers (array indices), front and rear, that keep track of the elements. The front pointer points to the first (oldest) element in the queue, while the rear pointer points to the last (newest) element.

When we enqueue an element, we increment the rear pointer and store the new element at that position. When we dequeue an element, we read the element at the front pointer and then increment front, so the next element becomes the new front.

The advantage of using an array to implement a queue is its simplicity and compact memory layout. However, there is a limitation: the capacity is fixed when the array is created. In the simple version shown here, once rear reaches the last index we can no longer enqueue new elements, even if earlier dequeues have freed slots at the front. A circular array, in which the indices wrap around to the start, avoids that wasted space.


1. Declare the necessary variables:

int maxSize;      // Maximum size of the queue
int[] queueArr;   // Array to store the elements
int front;        // Front pointer (index of the oldest element)
int rear;         // Rear pointer (index of the newest element)
int currentSize;  // Current size of the queue

2. Initialize the queue with the desired maximum size:

public Queue(int size) {
    maxSize = size;
    queueArr = new int[maxSize];
    front = 0;
    rear = -1;        // -1 means nothing has been enqueued yet
    currentSize = 0;
}

3. Implement the enqueue operation to insert an element into the queue:

public void enqueue(int data) {
    // rear at the last index means no slot is left at the end of the array
    if (rear == maxSize - 1) {
        System.out.println("Queue is full. Unable to enqueue element.");
        return;
    }
    queueArr[++rear] = data;
    currentSize++;
}

4. Implement the dequeue operation to remove an element from the queue:

public int dequeue() {
    if (isEmpty()) {
        System.out.println("Queue is empty. Unable to dequeue element.");
        return -1; // sentinel value; ambiguous if -1 can also be stored in the queue
    }
    int removedItem = queueArr[front++];
    currentSize--;
    return removedItem;
}

5. Implement the isEmpty method to check if the queue is empty:

public boolean isEmpty() {
    return currentSize == 0;
}

6. Implement the isFull method to check if the queue is full:

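public boolean isFull() {
    // Mirrors the check in enqueue: this simple version does not reuse
    // slots freed by dequeue, so it is full once rear reaches the last index.
    return rear == maxSize - 1;
}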

With these pieces in place, you have a basic fixed-capacity queue backed by an array in Java. Keep in mind that this simple version does not reuse the slots freed by dequeue.
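The array-based queue above stops accepting elements once rear reaches the last index, even after earlier elements have been dequeued. A common way around this is a circular (ring) buffer, where the indices wrap around to the start of the array. The following is a minimal sketch of that idea, not the implementation from the steps above. The class name `CircularIntQueue`, the choice to return `false` on overflow, and the use of `NoSuchElementException` on underflow are assumptions made for illustration.

```java
import java.util.NoSuchElementException;

class CircularIntQueue {
    private final int[] items;
    private int front = 0;  // index of the oldest element
    private int size = 0;   // number of elements currently stored

    CircularIntQueue(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        items = new int[capacity];
    }

    boolean isEmpty() { return size == 0; }

    boolean isFull() { return size == items.length; }

    // Returns false instead of printing a message when the queue is full.
    boolean enqueue(int value) {
        if (isFull()) {
            return false;
        }
        int rear = (front + size) % items.length; // next free slot, wrapping around
        items[rear] = value;
        size++;
        return true;
    }

    // Throws instead of returning -1, so -1 stays usable as ordinary data.
    int dequeue() {
        if (isEmpty()) {
            throw new NoSuchElementException("queue is empty");
        }
        int value = items[front];
        front = (front + 1) % items.length; // advance front, wrapping around
        size--;
        return value;
    }

    int peek() {
        if (isEmpty()) {
            throw new NoSuchElementException("queue is empty");
        }
        return items[front];
    }
}
```

Because front and the computed rear index wrap with the modulo operator, a slot freed by dequeue becomes available to the next enqueue, and both operations remain O(1).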

Implementing a Queue Using a Linked List in Java

Another popular method to implement a queue is by using a linked list. In this approach, each element in the queue is represented by a node that contains the data and a pointer to the next node.

To enqueue an element, we create a new node and link it after the current rear: the rear node’s next pointer is set to the new node, which then becomes the new rear. If the queue is empty, the new node becomes both the front and the rear.

When dequeuing an element, we simply remove the node pointed to by the front pointer and update the front pointer to the next node.

The main advantage of using a linked list for implementing a queue is its dynamic nature. Unlike an array-based queue, a linked list can grow and shrink as needed, allowing for flexibility in handling varying amounts of data.

1. Define the Node class to represent each element in the queue:

class Node {
    int data;   // Data of the node
    Node next;  // Reference to the next node

    public Node(int data) {
        this.data = data;
        this.next = null;
    }
}

2. Declare the necessary variables:

Node front;  // Front pointer (oldest node)
Node rear;   // Rear pointer (newest node)
int size;    // Current size of the queue

3. Implement the enqueue operation to insert an element into the queue:

public void enqueue(int data) {
    Node newNode = new Node(data);
    if (isEmpty()) {
        front = newNode;        // first element: it is also the front
    } else {
        rear.next = newNode;    // link the new node after the current rear
    }
    rear = newNode;
    size++;
}

4. Implement the dequeue operation to remove an element from the queue:

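public int dequeue() {
    if (isEmpty()) {
        System.out.println("Queue is empty. Unable to dequeue element.");
        return -1; // sentinel value; ambiguous if -1 can also be stored in the queue
    }
    int removedItem = front.data;
    front = front.next;
    if (front == null) {
        rear = null;   // the queue is now empty, so clear rear as well
    }
    size--;
    return removedItem;
}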

5. Implement the isEmpty method to check if the queue is empty:

public boolean isEmpty() {
    return size == 0;
}

6. Implement the getSize method to get the current size of the queue:

public int getSize() {
    return size;
}
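The linked-list steps above can be assembled into a single class. The following is a minimal sketch that makes two changes which are assumptions of this example rather than part of the steps above: it is generic, so it can hold any element type, and it throws `NoSuchElementException` on an empty queue instead of returning -1, so that -1 remains a valid element. The class name `LinkedQueue` and the added `peek` method are hypothetical.

```java
import java.util.NoSuchElementException;

class LinkedQueue<T> {
    // One node per element: the data plus a link to the next node.
    private static final class Node<T> {
        final T data;
        Node<T> next;

        Node(T data) {
            this.data = data;
        }
    }

    private Node<T> front;  // oldest node, removed first
    private Node<T> rear;   // newest node, new nodes are linked after it
    private int size;

    void enqueue(T data) {
        Node<T> newNode = new Node<>(data);
        if (isEmpty()) {
            front = newNode;
        } else {
            rear.next = newNode;
        }
        rear = newNode;
        size++;
    }

    T dequeue() {
        if (isEmpty()) {
            throw new NoSuchElementException("queue is empty");
        }
        T removed = front.data;
        front = front.next;
        if (front == null) {
            rear = null;  // queue became empty
        }
        size--;
        return removed;
    }

    T peek() {
        if (isEmpty()) {
            throw new NoSuchElementException("queue is empty");
        }
        return front.data;
    }

    boolean isEmpty() { return size == 0; }

    int getSize() { return size; }
}
```

Keeping both a front and a rear reference is what makes enqueue and dequeue O(1): neither operation has to walk the list.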

Real-Life Examples of FIFO Queues


FIFO queues find applications in various real-life scenarios. Let’s explore a few examples where queues are commonly used.

- Supermarket Checkout: Supermarkets often employ FIFO queues at their checkout counters. The customers join a single file, and the cashier serves them one by one in the order they arrived.

- Print Spooler: When multiple users send print requests to a shared printer, a print spooler manages these requests in a queue. The printer serves each print job in the order it was received, ensuring fairness and orderliness.

- Task Scheduling: In operating systems, schedulers use queues to manage processes that are ready to run. A simple first-come, first-served scheduler keeps a single FIFO ready queue, while more elaborate schedulers maintain several queues with different priorities and allocate CPU time accordingly.

- Burger Chain Drive-Thru: Drive-thru lanes at fast-food restaurants implement queues to handle incoming orders. The cars line up, and the staff takes orders and serves meals in the order the cars arrived.

These are just a few instances where FIFO queues are employed in real-life scenarios. Their ability to maintain order and ensure fairness makes them an essential tool for efficient resource allocation.

Advantages and Disadvantages of FIFO Queues

Like any data structure, FIFO queues have their advantages and disadvantages. Let’s take a closer look at both sides:

Advantages

- Order Preservation: FIFO queues maintain the order of elements, making them suitable for scenarios where processing order matters.

- Efficient Operations: Enqueue and dequeue operations in a queue are generally efficient, with a time complexity of O(1) in most implementations.

- Flexibility: Implementing a queue using a linked list allows for dynamic resizing, enabling the queue to grow or shrink based on the data volume.

Disadvantages

- Limited Capacity: If implemented using a fixed-size array, a FIFO queue has a fixed capacity. Once the queue is full, new elements cannot be added, and a simple (non-circular) array implementation cannot reuse the space freed at the front.

- Random Access Limitation: FIFO queues do not support random access to elements. To access an element at a specific position, we need to dequeue elements until we reach the desired position.

Despite these limitations, the FIFO queue remains a valuable data structure in many scenarios, enabling efficient and ordered processing of data.

FAQs on the Queue (FIFO) Data Structure

Q1. What is the difference between a stack and a queue?

A stack is another popular data structure, one that follows the Last-In, First-Out (LIFO) principle. A queue removes the oldest element first, whereas a stack does the opposite: the last element inserted is the first one to be removed.
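The difference is easy to see by feeding the same values into both structures and draining them. The following is a minimal sketch, assuming `java.util.ArrayDeque` is used as a queue through `offer` and `poll` and as a stack through `push` and `pop`. The class name `StackVersusQueue` and its two helper methods are hypothetical names for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Queue;

class StackVersusQueue {
    // FIFO: values come out in the order they went in.
    static String drainAsQueue(int... values) {
        Queue<Integer> queue = new ArrayDeque<>();
        for (int v : values) {
            queue.offer(v);
        }
        StringBuilder out = new StringBuilder();
        while (!queue.isEmpty()) {
            out.append(queue.poll());
        }
        return out.toString();
    }

    // LIFO: values come out in the reverse of the order they went in.
    static String drainAsStack(int... values) {
        Deque<Integer> stack = new ArrayDeque<>();
        for (int v : values) {
            stack.push(v);
        }
        StringBuilder out = new StringBuilder();
        while (!stack.isEmpty()) {
            out.append(stack.pop());
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(drainAsQueue(1, 2, 3)); // prints 123
        System.out.println(drainAsStack(1, 2, 3)); // prints 321
    }
}
```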

Q2. Can we implement a queue using a stack?

Yes, it is possible to implement a queue using two stacks. This approach, known as the “two-stack method,” allows us to simulate the FIFO behavior of a queue using the LIFO behavior of stacks.

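To implement a queue using stacks, we use one stack for enqueue operations and another stack for dequeue operations. When we enqueue an element, we simply push it onto the first stack. When we need to dequeue an element and the second stack is empty, we transfer all elements from the first stack to the second stack, which reverses their order. Then we pop the top element of the second stack, which is the oldest element in the queue. If the second stack still holds elements, we pop from it directly without transferring anything, since those elements are older than everything in the first stack. Each element is moved at most once, so dequeue takes amortized O(1) time.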
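A minimal sketch of the two-stack method follows. It transfers elements only when the dequeue stack is empty, which is what keeps the FIFO order intact when enqueues and dequeues are interleaved. The class name `TwoStackQueue` and the field names `inbox` and `outbox` are hypothetical, and `java.util.ArrayDeque` stands in for the stacks (note that it does not accept null elements).

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.NoSuchElementException;

class TwoStackQueue<T> {
    private final Deque<T> inbox = new ArrayDeque<>();   // receives enqueued elements
    private final Deque<T> outbox = new ArrayDeque<>();  // serves dequeued elements

    void enqueue(T item) {
        inbox.push(item);
    }

    T dequeue() {
        // Refill only when outbox is empty; moving elements reverses their
        // order, so the oldest element ends up on top of outbox.
        if (outbox.isEmpty()) {
            while (!inbox.isEmpty()) {
                outbox.push(inbox.pop());
            }
        }
        if (outbox.isEmpty()) {
            throw new NoSuchElementException("queue is empty");
        }
        return outbox.pop();
    }

    boolean isEmpty() {
        return inbox.isEmpty() && outbox.isEmpty();
    }
}
```

For example, after enqueuing 1 and 2, dequeuing once, and then enqueuing 3, the remaining dequeues yield 2 and then 3, because 2 is still waiting in the second stack when 3 arrives.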

Q3. Are queues thread-safe?

Thread safety depends on the implementation of the queue. In a single-threaded environment, where only one thread accesses the queue, there is no need for synchronization. However, in a multi-threaded environment where multiple threads access the queue simultaneously, additional measures need to be taken to ensure thread safety.

Q4. What are some common synchronization techniques used with queues?

To achieve thread safety in multi-threaded environments, several synchronization techniques can be used, such as:

Locks: Using locks, such as mutexes or semaphores, to allow only one thread to access the queue at a time.

Atomic Operations: Utilizing atomic operations provided by the programming language to ensure indivisible access to shared resources.

Thread-Safe Implementations: Some programming languages offer built-in thread-safe queue implementations that handle synchronization internally.
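As an illustration of the last option, Java ships thread-safe queues in `java.util.concurrent`. The following is a minimal producer-consumer sketch, assuming an `ArrayBlockingQueue` with a capacity of 2, whose `put` blocks while the queue is full and whose `take` blocks while it is empty. The class name `ProducerConsumerDemo` and the method `transfer` are hypothetical names for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumerDemo {
    // A producer thread enqueues 1..count; the calling thread consumes them.
    static List<Integer> transfer(int count) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);

        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= count; i++) {
                    queue.put(i);  // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        List<Integer> received = new ArrayList<>();
        try {
            for (int i = 0; i < count; i++) {
                received.add(queue.take());  // blocks while the queue is empty
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while consuming", e);
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(transfer(5));
    }
}
```

With a single producer and a single consumer, the elements arrive in the order they were put, and no explicit locking appears in the calling code because the queue handles synchronization internally.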

Q5. Can a queue be empty and full at the same time?

No, a queue with a capacity of at least one element cannot be empty and full simultaneously. If a queue is empty, it means there are no elements in it. Conversely, if a queue is full, it means it has reached its maximum capacity and cannot accommodate additional elements.

Q6. What happens when we try to enqueue an element into a full queue?

When attempting to enqueue an element into a full queue, we encounter an overflow condition. Depending on the implementation, the behavior may vary. In some cases, an error or exception is raised, indicating that the operation failed. In other cases, the element may be discarded or the queue’s size may be automatically increased to accommodate the new element.
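Java's bounded `ArrayBlockingQueue` shows two of these behaviors side by side: on a full queue, `add` throws an `IllegalStateException`, while `offer` returns `false` and leaves the queue unchanged. The following is a minimal sketch of both; the class name `OverflowDemo` and its method names are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;

class OverflowDemo {
    // offer reports failure through its return value.
    static boolean offerOnFullQueue() {
        ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.offer("first");
        return queue.offer("second");  // queue is full, so the element is rejected
    }

    // add signals the same condition with an exception.
    static boolean addThrowsOnFullQueue() {
        ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.add("first");
        try {
            queue.add("second");
            return false;
        } catch (IllegalStateException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("offer accepted: " + offerOnFullQueue());
        System.out.println("add threw: " + addThrowsOnFullQueue());
    }
}
```

Which variant to call depends on whether a full queue is an expected situation to handle in normal control flow or an error worth surfacing.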

Q7. Can we remove an element from the middle of a queue?

No, FIFO queues do not support the removal of elements from the middle. To remove an element, we must dequeue elements from the front until we reach the desired position. This characteristic makes queues unsuitable for scenarios that require frequent removal of elements from arbitrary positions.

Q8. How can we efficiently search for an element in a queue?

FIFO queues are not designed for efficient searching. Finding an element means inspecting the elements one by one, which takes O(n) time. If frequent lookups are a requirement, it is often more appropriate to use a data structure built for searching, such as a hash table or a sorted array, either instead of or alongside the queue.

Q9. Can a queue contain duplicate elements?

Yes, a queue can contain duplicate elements. Each element in the queue is treated independently, and there are no restrictions on duplicates.

Conclusion

In conclusion, FIFO data structures are a fundamental concept in computer science and programming. They offer a systematic way of organizing data, ensuring that the order of insertion is preserved during processing. Whether it’s managing tasks in an operating system or handling customers in a line, FIFO queues find numerous applications in real-life scenarios.

By understanding the principles and operations of FIFO queues, you can effectively utilize them in your programs and systems. Whether you choose to implement a queue using an array or a linked list, the advantages of FIFO queues, such as order preservation and efficient operations, make them a valuable tool in your programming toolkit.

So, the next time you’re waiting in line, remember the fascinating world of FIFO queues working behind the scenes, ensuring fairness and orderliness in various domains.
